TReDEx: Technical Report Document Extraction

Problem:

Loss Adjusting companies generate extensive technical reports in PDF format containing a complex mix of unstructured text and semi-structured data including tables, claim details, and financial figures. Manual review of these documents to extract critical information such as financial data, loss types, and location details proved time-consuming and resource-intensive.

Our client faced a significant backlog of reports in non-uniform formats. While most reports followed a basic structure with claim details on the first page and additional information across multiple pages, the lack of standardization made systematic data extraction extremely challenging.

Solution:

We developed TReDEx, an LLM-powered document parsing and data extraction framework designed to ease extraction from unstructured documents that don't follow fixed layouts or structures. The solution leverages Retrieval-Augmented Generation (RAG), combining Large Language Models' natural language understanding and instruction-following capabilities with highly optimized document retrieval algorithms.

Architecture:

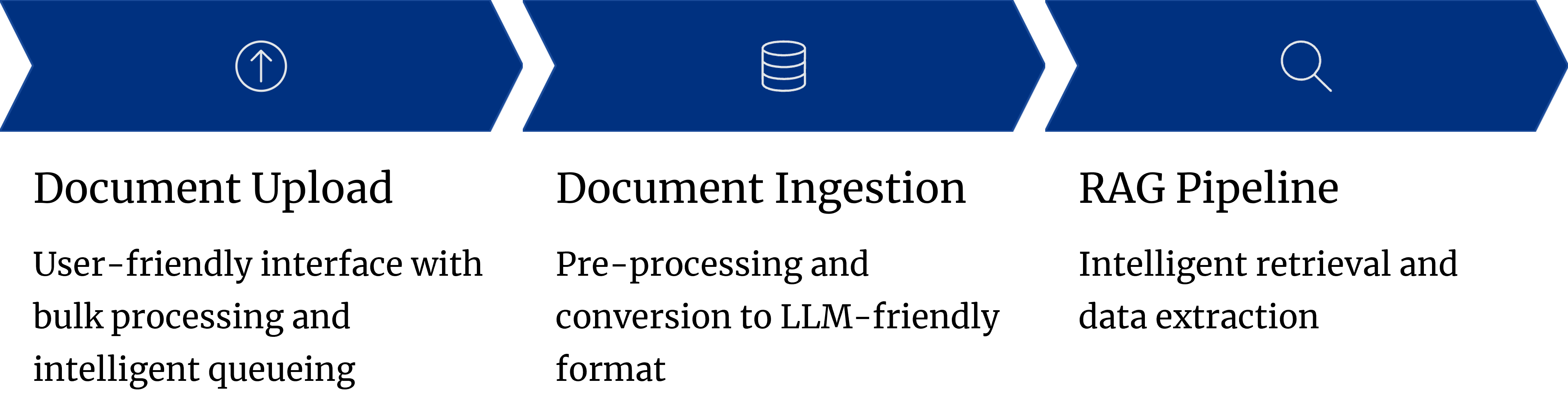

The proposed solution consists of 3 major steps in its design architecture:

- Document upload

- Document ingestion

- RAG pipeline

Document upload: While it seems simple, this step focuses on making the file upload process as user-friendly as possible, allowing multiple file uploads for bulk processing, allowing grouping of multi-stage incident report (e.g. Initial report, Final report) and a robust queueing and task scheduling for optimal use of compute resources.

Document ingestion: This is the most crucial step in the pipeline, which performs the pre-processing of the uploaded document. We convert the uploaded PDF document into an LLM-friendly format, which consists of first extracting all textual and tabular data, while maintaining context awareness by means of chunking and text summarization, through a process called ingestion. The ingested data is maintained (per-document) in a specialized database called a Vector Database (or VectorDB) for further use.

RAG pipeline: This stage performs the actual data extraction, which is based on the client’s requirements. For example, a report’s title can be extracted by means of a “query”. The RAG pipeline consists of 2 sub-stages:

- VectorDB search (Retrieval)

- LLM Summarization (Generation) This is the core philosophy of RAG, which allows a pre-trained General purpose LLM to perform data search from arbitrary sources, such as the user-uploaded documents. A customized prompt helps in improving extraction results based on the use-case.

Conclusion:

By developing this platform, our client could automate report extraction and improve the rate at which the reports were converted. We performed an analysis on accuracy of the data by manual review and found it to be >95% with only minor mistakes being spotted and no serious incorrect extraction issues.